|

I am a Master's student in Electrical and Computer Engineering at Georgia Tech. Previously, I was a research intern in the Physics Laboratory at ENS de Lyon, France, where I worked with Pierre Borgnat and Paulo Gonçalves on machine learning techniques for neuroscience, specifically the detection of Seizure Onset Zones (SOZ) from stereo-EEG signals in epilepsy patients. This work led to an extended abstract accepted at the Graph Signal Processing Workshop (MILA, Montréal, May 2025), and I am now preparing a full paper based on it. |

|

Research

My research focuses on developing more effective and interpretable representation learning methods, particularly for high-dimensional biomedical signals and multimodal data. I work at the intersection of contrastive learning, Transformer architectures, and domain-specific knowledge integration to solve real-world problems.

|

|

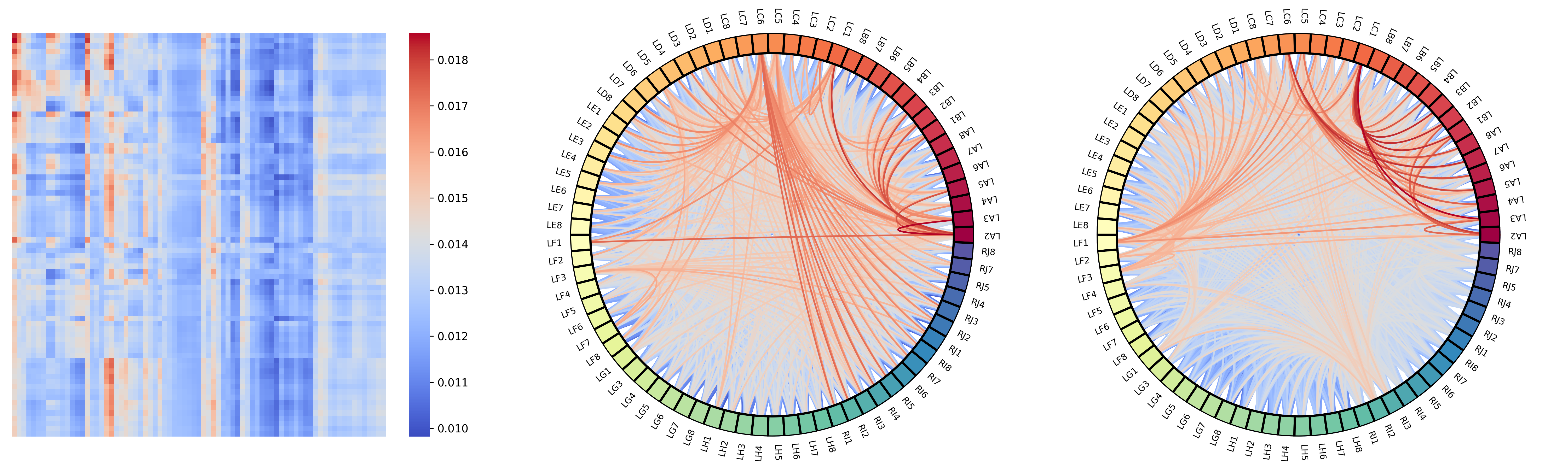

Spatial Contrastive Pre-Training of Transformer Encoders for sEEG-based Seizure Onset Zone Detection

Zacharie Rodière, Pierre Borgnat, Paulo Gonçalves

Graph Signal Processing (GSP) Workshop, MILA, 2025

Workshop Page / Extended Abstract / Poster / Internship Report

Leveraging clinically informed time-frequency features and spatial contrastive pre-training within a Transformer encoder for improved Seizure Onset Zone (SOZ) localization from stereo-EEG. |

|

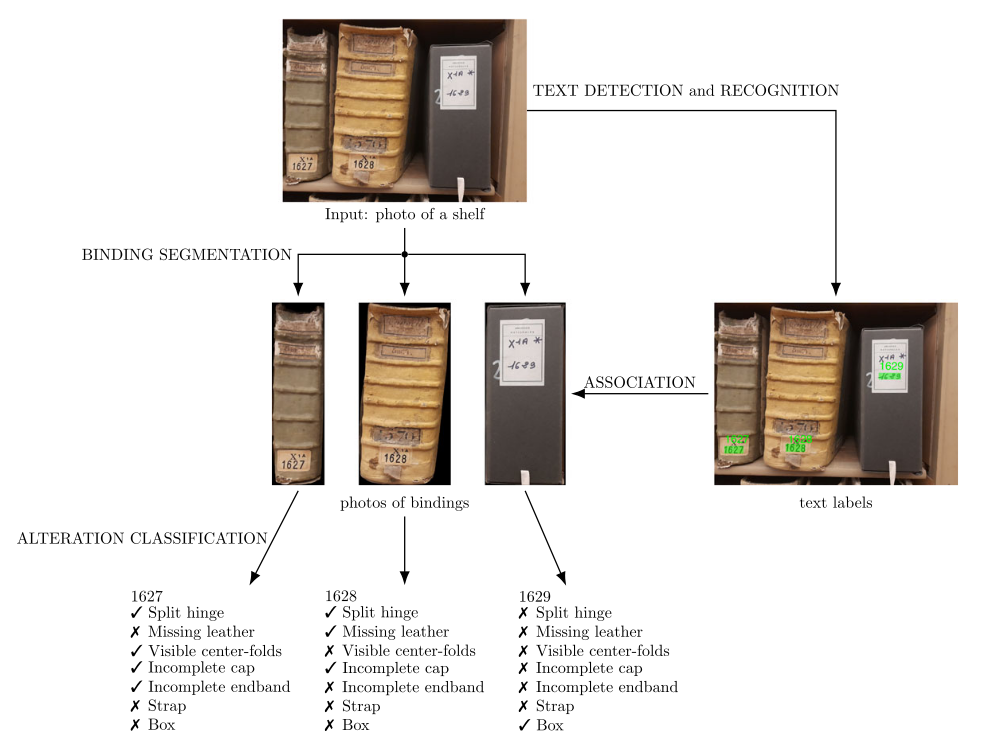

A deep learning-based pipeline for the conservation assessment of bindings in archives and libraries

Valérie Lee-Gouet, Yamoun Lahcen, Zacharie Rodière, Camille Simon Chane, Michel Jordan, Julien Longhi, David Picard

Multimedia Tools and Applications, 2025 (Published Online)

[DOI]

Developed and evaluated a Vision Transformer-based system for multi-label classification of defects on historical book bindings to aid conservation efforts. |